Jetson is NVIDIA's embedded AI computing platform. Compared with laptops and servers, Jetson devices are smaller and draw less power, which makes them well suited to deployment at the edge. Oxford Nanopore Technologies has also released the MinION Mk1C, which is based on the Jetson platform, for real-time analysis of sequencing data. This article describes how to install and run the Bonito basecaller on Jetson for real-time sequencing data analysis and model training on edge devices.

This article is based on the Jetson Xavier NX development board running Jetson Linux 35.2.1. Other Jetson devices may require minor adjustments.

$ uname -r

5.10.216-tegra

$ nvcc --version

nvcc: NVIDIA (R) Cuda compiler driver

Copyright (c) 2005-2022 NVIDIA Corporation

Built on Sun_Oct_23_22:16:07_PDT_2022

Cuda compilation tools, release 11.4, V11.4.315

Build cuda_11.4.r11.4/compiler.31964100_0

Ensure CUDA Dependencies

Bonito is a deep-learning basecaller that runs on CUDA, so the CUDA dependencies must be installed first. When installing Jetson Linux with sdkmanager, select the CUDA Toolkit component. Once the system is up, you can check the CUDA version with the following command:

$ nvcc --version

nvcc: NVIDIA (R) Cuda compiler driver

Copyright (c) 2005-2022 NVIDIA Corporation

Built on Sun_Oct_23_22:16:07_PDT_2022

Cuda compilation tools, release 11.4, V11.4.315

Build cuda_11.4.r11.4/compiler.31964100_0
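The same check can be scripted, which helps when picking a matching PyTorch wheel later on. The following is a minimal sketch, not part of Bonito or the CUDA Toolkit; the helper names `parse_cuda_release` and `installed_cuda_release` are hypothetical, and the sketch assumes only that `nvcc --version` prints a "release X.Y" field as shown above.

```python
import re
import shutil
import subprocess
from typing import Optional


def parse_cuda_release(nvcc_output: str) -> Optional[str]:
    """Return the CUDA release (for example '11.4') from `nvcc --version` text."""
    match = re.search(r"release\s+(\d+\.\d+)", nvcc_output)
    return match.group(1) if match else None


def installed_cuda_release() -> Optional[str]:
    """Run nvcc if it is on PATH and return its release, otherwise None."""
    nvcc = shutil.which("nvcc")
    if nvcc is None:
        return None
    result = subprocess.run(
        [nvcc, "--version"], capture_output=True, text=True, check=False
    )
    return parse_cuda_release(result.stdout)


if __name__ == "__main__":
    release = installed_cuda_release()
    print("CUDA release:", release or "nvcc not found on PATH")
```

If `nvcc` is not found, the CUDA Toolkit may be installed but missing from `PATH`, so it is worth checking the shell configuration before reinstalling.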

Install Conda

To avoid conflicts with the system’s Python environment, we can use Conda to create an independent Python environment. First, we create a Conda environment:

conda create -n bonito-py38-081

conda activate bonito-py38-081

Then install Python 3.8 in this environment:

conda install python=3.8

Install Bonito

Bonito's requirements.txt lists the following Python dependencies:

# general requirements

edlib

fast-ctc-decode

mappy

networkx # required for py3.8 torch compatability

numpy<2 # numpy~=2 is currently unreleased, it may have breaking changes

pandas<3 # pandas~=3 is currently unreleased, it may have breaking changes

parasail

pod5

pysam

python-dateutil

requests

toml

tqdm

wheel

# specific requirements

torch==2.1.2

# ont requirements

ont-fast5-api

ont-koi

ont-remora

In the author's testing, the packages with compatibility issues on the arm64 architecture are mainly torch and ont-koi. PyTorch must be installed from the torch wheel provided by NVIDIA, and ont-koi requires porting the koi library used by Dorado.

PyTorch

According to NVIDIA's documentation and compatibility matrix, we can install version 2.1.0a0, which is the closest available build to PyTorch 2.1.2 (select the PyTorch build that matches your actual JetPack version):

# Download the PyTorch wheel file

wget https://developer.download.nvidia.com/compute/redist/jp/v512/pytorch/torch-2.1.0a0+41361538.nv23.06-cp38-cp38-linux_aarch64.whl

# Install numpy and pandas

pip install "numpy<2" "pandas<3"

# Install PyTorch

pip install torch-2.1.0a0+41361538.nv23.06-cp38-cp38-linux_aarch64.whl
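Before installing a downloaded wheel, it can be useful to confirm that its tags match the active interpreter, since pip rejects a wheel built for a different Python version or CPU architecture. The sketch below splits a wheel filename into its standard fields; the function names are hypothetical, and the architecture comparison is a deliberately simplified assumption (it only checks that the platform tag ends with the machine name).

```python
import platform
import sys


def parse_wheel_filename(filename: str) -> dict:
    """Split a wheel filename into name, version, python, abi and platform tags."""
    if not filename.endswith(".whl"):
        raise ValueError("not a wheel file: " + filename)
    stem = filename[: -len(".whl")]
    # The last three dash-separated fields are the python, abi and platform tags.
    head, python_tag, abi_tag, platform_tag = stem.rsplit("-", 3)
    # The remainder is name-version, optionally followed by a build tag.
    parts = head.split("-")
    return {
        "name": parts[0],
        "version": parts[1],
        "python": python_tag,
        "abi": abi_tag,
        "platform": platform_tag,
    }


def matches_this_interpreter(tags: dict) -> bool:
    """Simplified check: CPython major/minor and CPU architecture must agree."""
    expected_python = "cp{}{}".format(*sys.version_info[:2])
    return (
        tags["python"] == expected_python
        and tags["platform"].endswith(platform.machine())
    )


if __name__ == "__main__":
    wheel = "torch-2.1.0a0+41361538.nv23.06-cp38-cp38-linux_aarch64.whl"
    tags = parse_wheel_filename(wheel)
    print(tags, "compatible:", matches_this_interpreter(tags))
```

Inside the Python 3.8 Conda environment on a Jetson, the NVIDIA wheel above should report as compatible, while the x86_64 ont-koi wheel used later should not.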

After installation, you can verify that PyTorch detects the Jetson's GPU:

python3

>>> import torch

>>> torch.cuda.get_device_name(0)

'Xavier'
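Reading the device name confirms that the GPU is visible, but not that kernels actually run. As a slightly stronger check, the sketch below also launches a tiny computation on the GPU. The function name `describe_cuda` is hypothetical; the script assumes only the standard `torch.cuda` calls and degrades to a message when torch or CUDA is unavailable.

```python
def describe_cuda() -> str:
    """Summarize whether PyTorch can see and use a CUDA device."""
    try:
        import torch
    except ImportError:
        return "torch is not installed in this environment"

    if not torch.cuda.is_available():
        return "torch {} is installed, but CUDA is not available".format(
            torch.__version__
        )

    # Run a tiny computation on the GPU to confirm that kernels launch.
    x = torch.ones(1024, device="cuda")
    total = x.sum().item()
    return "torch {} (CUDA {}) on {}: sum of 1024 ones = {}".format(
        torch.__version__,
        torch.version.cuda,
        torch.cuda.get_device_name(0),
        total,
    )


if __name__ == "__main__":
    print(describe_cuda())
```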

ont-koi

Compile the .so file

Since ONT only provides the koi Python package for the x86_64 architecture, we need to port it to arm64 ourselves. Dorado's Koi.cmake, however, references pre-compiled koi libraries, including an aarch64 build, so we can download that archive and compile the Python extension (.so file) against it. First, download the koi package from ONT:

# Download the koi package

wget https://cdn.oxfordnanoportal.com/software/analysis/libkoi-0.4.3-Linux-aarch64-cuda-11.4.tar.gz

tar -zxvf libkoi-0.4.3-Linux-aarch64-cuda-11.4.tar.gz

Then, create a setup.py in the folder:

from cffi import FFI

ffi = FFI()

def build_koi():
    # Declare the C API by feeding the koi header to cffi
    with open("include/koi.h", 'r') as f:
        header_content = f.read()
    ffi.cdef(header_content)
    ffi.set_source(
        'koi._runtime',
        '#include <koi.h>',
        include_dirs=['./include'],  # include
        library_dirs=['./lib'],  # lib
        libraries=['koi'],  # link libkoi.a
        extra_link_args=['-Wl,-rpath,$ORIGIN/lib']
    )
    ffi.compile()

if __name__ == '__main__':
    build_koi()

Then run the script. Once compilation finishes, the file _runtime.cpython-38-aarch64-linux-gnu.so can be found in the current directory.

Install ont-koi

Because the published ont-koi wheel targets x86_64 rather than arm64, we need to install ont-koi manually and swap in the arm64 library:

# We first download the x86_64 architecture whl package

wget https://files.pythonhosted.org/packages/fe/68/4bb9241c65d6c0c51b6ae366b5dedda2e8a41ba17d2559079ee8811808ef/ont_koi-0.4.4-cp38-cp38-manylinux_2_17_x86_64.manylinux2014_x86_64.whl

# Unzip the wheel file (a wheel is essentially a zip file)

unzip ont_koi-0.4.4-cp38-cp38-manylinux_2_17_x86_64.manylinux2014_x86_64.whl -d ont_koi_temp

# Find the Python site-packages directory

SITE_PACKAGES=$(python -c "import site; print(site.getsitepackages()[0])")

# Copy the files directly to site-packages (but be sure to back up first)

cp -r ont_koi_temp/koi $SITE_PACKAGES/

cp -r ont_koi_temp/ont_koi.libs $SITE_PACKAGES/

cp -r ont_koi_temp/ont_koi-0.4.4.dist-info $SITE_PACKAGES/

# Replace the so file

cp /path/to/your/new/_runtime.cpython-38-aarch64-linux-gnu.so $SITE_PACKAGES/koi/_runtime.abi3.so
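After the copy, it is easy to end up with the original x86_64 library still in place. One way to verify the replacement is to read the machine field from the ELF header of the installed file. The sketch below is an illustration under stated assumptions: the function name `elf_machine` is hypothetical, and only the two architectures relevant here are mapped (ELF machine codes 62 for x86_64 and 183 for aarch64).

```python
import struct

# ELF e_machine codes for the two architectures relevant to this article.
EM_NAMES = {62: "x86_64", 183: "aarch64"}


def elf_machine(path: str) -> str:
    """Return the target architecture recorded in an ELF file's header."""
    with open(path, "rb") as f:
        header = f.read(20)
    if len(header) < 20 or header[:4] != b"\x7fELF":
        raise ValueError(path + " is not an ELF file")
    # Byte 5 (EI_DATA) gives the byte order: 1 = little endian, 2 = big endian.
    byte_order = "<" if header[5] == 1 else ">"
    # e_machine is the 16-bit field at offset 18.
    (machine,) = struct.unpack(byte_order + "H", header[18:20])
    return EM_NAMES.get(machine, "unknown ({})".format(machine))
```

Calling `elf_machine` on `$SITE_PACKAGES/koi/_runtime.abi3.so` should return `aarch64` on a Jetson once the replacement has succeeded; `x86_64` means the file from the original wheel is still in place.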

Install Bonito

We first download the Bonito source code:

git clone https://github.com/nanoporetech/bonito

cd bonito

Comment out the torch and ont-koi dependencies in requirements.txt so that pip does not try to replace the versions installed above, and then install Bonito:

pip install .
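The requirements.txt edit can be done by hand, but a small script makes it repeatable when Bonito is re-cloned. This is a minimal sketch with a hypothetical helper name, `comment_out`; it assumes each requirement sits on its own line and begins with the package name, as in the file listed earlier.

```python
import re


def comment_out(requirements_text: str, names) -> str:
    """Prefix the requirement lines for the given package names with '# '."""
    wanted = {name.lower() for name in names}
    result = []
    for line in requirements_text.splitlines():
        # The package name is the leading run of letters, digits, '.', '_' or '-'.
        # Lines that already start with '#' do not match and are left untouched.
        match = re.match(r"\s*([A-Za-z0-9._-]+)", line)
        if match and match.group(1).lower() in wanted:
            line = "# " + line
        result.append(line)
    return "\n".join(result) + "\n"


if __name__ == "__main__":
    sample = "edlib\ntorch==2.1.2\n# ont requirements\nont-koi\nont-remora\n"
    print(comment_out(sample, ["torch", "ont-koi"]))
```

To apply it, read requirements.txt in the cloned repository, pass its text through `comment_out` with `["torch", "ont-koi"]`, and write the result back before running `pip install .`.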

Run Bonito

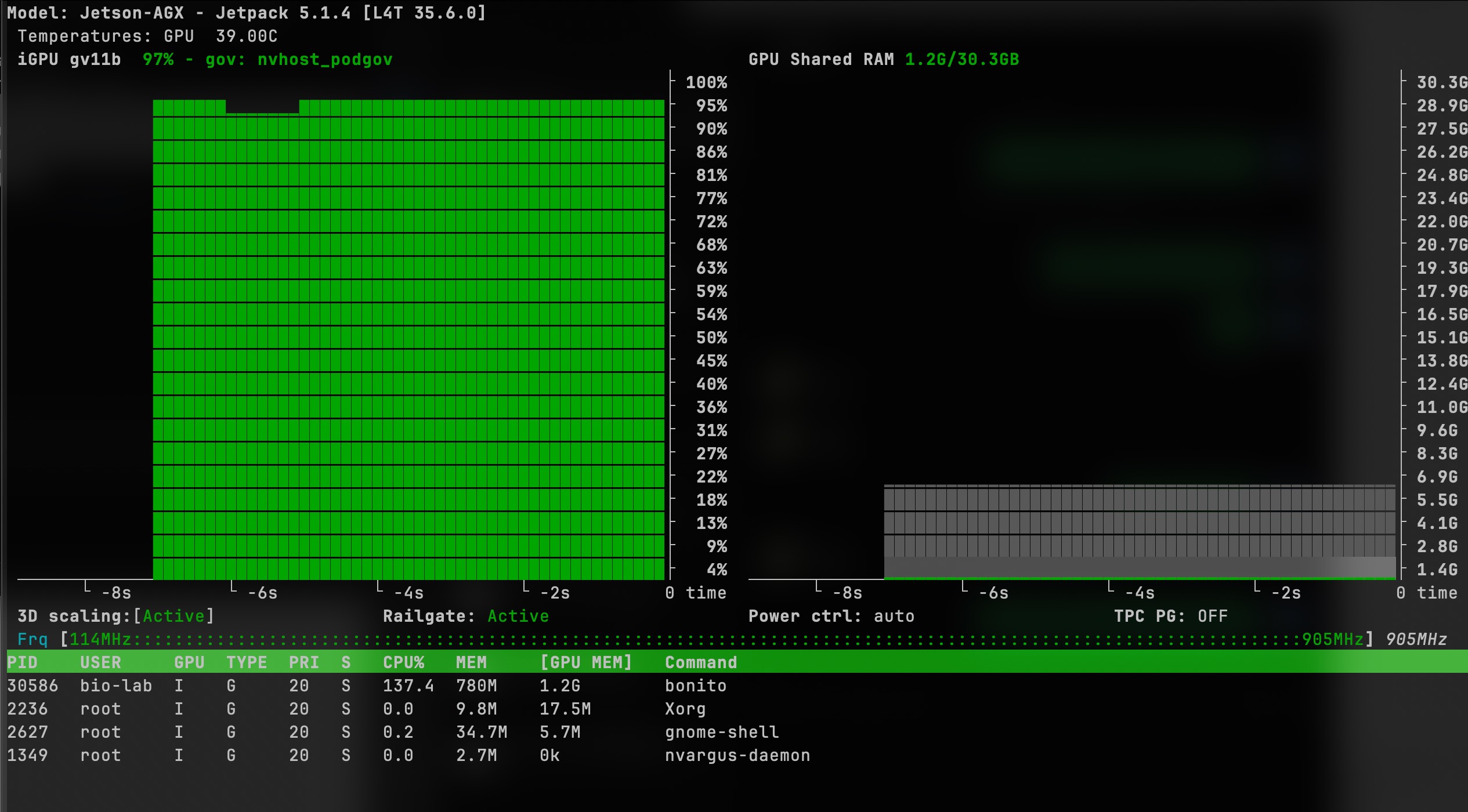

bonito basecaller <model> --reference reference.mmi /data/reads > basecalls.bam
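For batch runs on the device, the same command can be driven from Python. The sketch below only assembles the command-line form shown above and redirects standard output to a file; the function names are hypothetical, and the model name is left as a parameter because it depends on the chemistry and Bonito version in use.

```python
import subprocess


def bonito_basecaller_cmd(model, reads_dir, reference=None):
    """Build the argument list for `bonito basecaller`."""
    cmd = ["bonito", "basecaller", model]
    if reference is not None:
        cmd += ["--reference", reference]
    cmd.append(reads_dir)
    return cmd


def run_basecaller(model, reads_dir, output_path, reference=None):
    """Run bonito and write its standard output to output_path."""
    with open(output_path, "wb") as out:
        subprocess.run(
            bonito_basecaller_cmd(model, reads_dir, reference),
            stdout=out,
            check=True,  # raise CalledProcessError if bonito exits non-zero
        )
```

Passing the arguments as a list avoids shell quoting problems with paths that contain spaces.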